OpenAI is set to produce its first in-house AI chip next year in partnership with U.S. semiconductor giant Broadcom, the Financial Times reported, citing people familiar with the matter.

According to the sources, the chip will be used internally to power OpenAI’s systems rather than being offered to external customers. The ChatGPT maker relies on substantial computing power to train and operate its models.

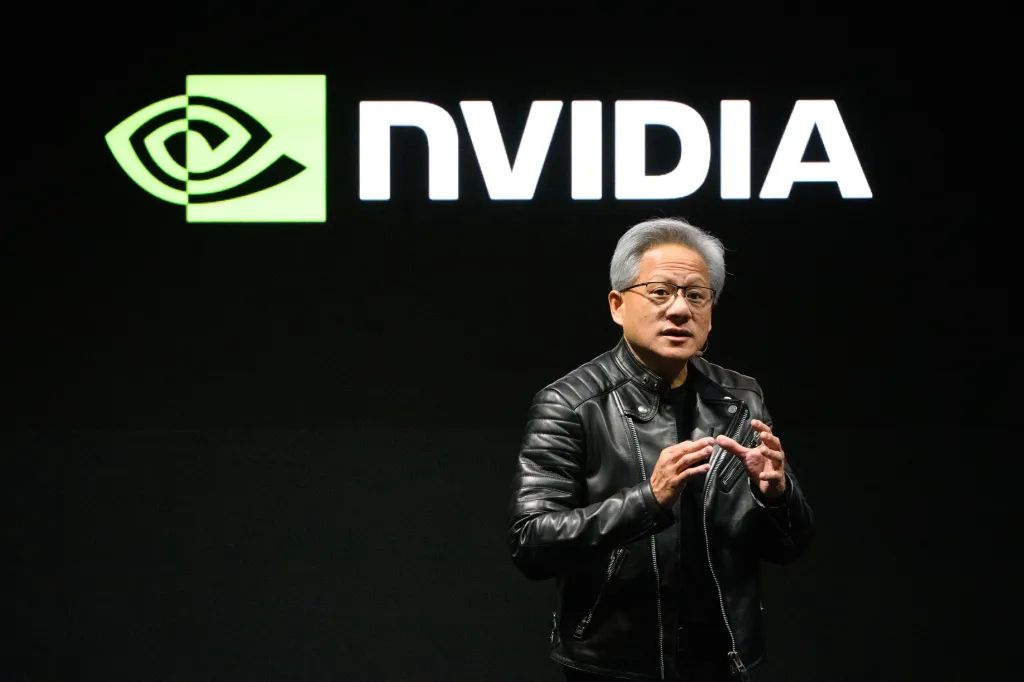

Earlier reports revealed that OpenAI has been collaborating with Broadcom and TSMC to design its first internal chip, while continuing to use AMD and Nvidia processors to meet surging infrastructure demand. According to those reports, the company was close to finalizing the chip design this year and sending it to TSMC for fabrication, as part of a long-term plan to reduce its reliance on Nvidia and lower costs.

Hock Tan, CEO of Broadcom, announced that the company has received more than $10 billion in AI infrastructure orders from a new client, which he did not name. He added that revenue in this sector will see strong growth in fiscal year 2026.

OpenAI’s move follows a broader industry trend led by Google, Amazon, and Meta, all of which are developing custom AI chips amid soaring demand for computing power.